There are many FAQs available about SRM installation and configuration. One that I like is:

http://www.yellow-bricks.com/srm-faq/

I would like to share my own experience. On Saturday, 21 November 2009, I tested a live DR scenario to confirm our customer's readiness.

This involved 26 key applications of our customer, running across 17 physical database servers and 45 virtual machines. We excluded AD and Exchange; for Exchange we used the EMS system from Dell.

For the SRM setup I used:

1. 4 ESX hosts running on DL380 G5 servers; some of them had QLogic HBAs installed and the others used software iSCSI.

2. All 4 ESX hosts were installed with ESX 3.5 U4, and on both sides I was running VC 2.5 U5.

3. All 4 ESX hosts were configured for HA/DRS.

4. Unfortunately, the 45 VMs were spread across 33 LUNs. I could have consolidated them from the SRM perspective.

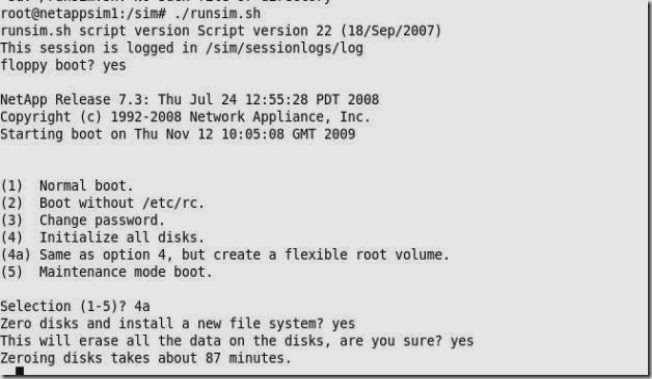

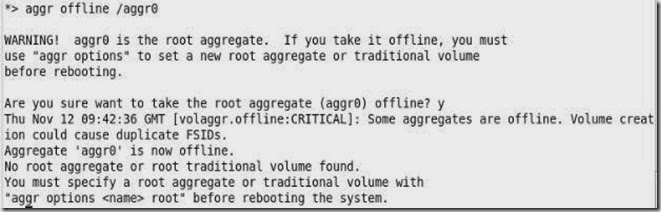

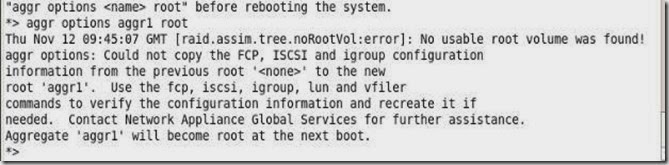

5. For this purpose I used SRM 1.0.1 with Patch 1. We use NetApp as our storage solution.

6. To save bandwidth, we kept the protected and recovery filers at the same location, completed the initial replication locally, and then shipped the recovery filer to the DR location. This avoids sending the initial (baseline) replication over the WAN; after that we depend only on incremental replication.

7. We are using a stretched VLAN so that we don't have to re-IP the machines when they are recovered at the recovery site.
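The bandwidth argument in item 6 is simple arithmetic. The sketch below compares shipping a full baseline over the WAN with sending only daily increments. The data sizes, link speed and the 80% efficiency factor are all hypothetical assumptions, not figures from this deployment.

```python
# Back-of-the-envelope estimate of WAN transfer time.
# All figures below are hypothetical; they are not from this deployment.

def transfer_hours(data_gb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to move data_gb (decimal GB) over a link_mbps link.

    efficiency is an assumed fraction of the nominal link speed that
    replication traffic actually achieves.
    """
    bits = data_gb * 8 * 1000**3
    usable_bits_per_second = link_mbps * 1000**2 * efficiency
    return bits / usable_bits_per_second / 3600


baseline = transfer_hours(2000, 10)    # hypothetical 2 TB initial baseline over 10 Mbps
daily_delta = transfer_hours(20, 10)   # hypothetical 20 GB of daily changes over the same link

print(f"baseline over WAN: {baseline:.1f} h ({baseline / 24:.1f} days)")
print(f"daily incremental: {daily_delta:.1f} h")
```

With these assumed numbers the baseline would take about 555.6 hours (roughly 23 days) against about 5.6 hours for a daily increment, which is why seeding the recovery filer locally and shipping it pays off.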

SRM setup and configuration:

1. Create a service account for SRM. It will be used to set up the SQL database for SRM and during the SRM installation.

2. Create the SRM database on SQL Server for each VirtualCenter. I configured the DSN beforehand, although the SRM setup will prompt for it.

3. Install all the SRM 1.0 patches and then install the SRA (Storage Replication Adapter). The adapter comes from the vendor whose storage solution you are using. You can download it from the VMware site, but only after logging in to your account; open downloads are not allowed. In my case I used the NetApp SRA.

4. Once both the Protected Site and the Recovery Site have all the components installed, we can start pairing the sites. At the Recovery Site we provide the Protected Site IP, and at the Protected Site we provide the Recovery Site IP, so that the two can be paired. Use the service account to pair the sites.

5. Once the sites are paired, we need to configure the SRA for the Protected Site and the Recovery Site. Before configuring it, make sure replication has completed (check with the storage admin); otherwise we cannot proceed further with the configuration. The replicated data is also what lets us create the bubble VMs at the recovery site.

Once replication is confirmed to be complete, configure the arrays by running the "Configure Array Managers" wizard.

a. For the Protected Site, supply the IP address of the NetApp filer. Make sure you use root or root-equivalent credentials to authenticate. You should then see the replicated array pairs. If your LUNs/volumes are spread across several filers, use the Add button to add all of those filers.

b. Then configure the same for the Recovery Site. For every filer that is paired, make sure you add its partner filer at the recovery site as well, again using root or root-equivalent credentials. Keep adding the recovery-site filer IPs; a green icon indicates that the array has been paired properly.

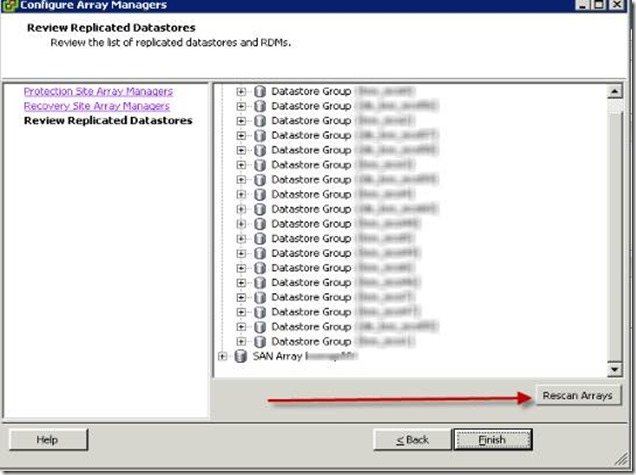

c. Once the Protected Site and Recovery Site arrays have been paired, all the replicated datastores should be listed. If they are not visible, rescan the array.

After the setup at the Protected Site, we need to do the same at the Recovery Site. The Protected Site and Recovery Site IPs remain as configured at the Protected Site. You won't see any replicated datastores at the Recovery Site, because we are not configuring two-way (bidirectional) recovery.

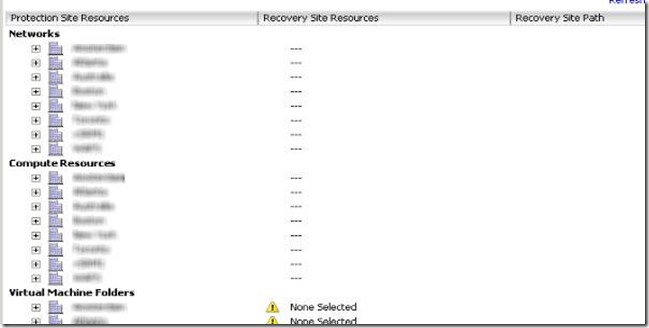
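The rule in step 5b (every protected filer's partner must also be added at the recovery site) can be expressed as a small pre-check before you expect the green icon. This is a hypothetical planning model in Python, not the SRA or SRM interface; the filer names and the `pairs` mapping are illustrative assumptions.

```python
from typing import Dict, List, Set


def missing_partner_filers(pairs: Dict[str, str], recovery_added: Set[str]) -> List[str]:
    """Return recovery-site partner filers that still need to be added.

    pairs maps each protected-site filer to the recovery-site filer it replicates to.
    recovery_added is the set of filers already added in the Recovery Site wizard.
    """
    return sorted({partner for partner in pairs.values() if partner not in recovery_added})


# Hypothetical filer names, for illustration only.
pairs = {"filer-prod-a": "filer-dr-a", "filer-prod-b": "filer-dr-b"}
print(missing_partner_filers(pairs, {"filer-dr-a"}))                # ['filer-dr-b']
print(missing_partner_filers(pairs, {"filer-dr-a", "filer-dr-b"}))  # []
```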

6. Now we need to configure the inventory mappings at the Protected Site.

This is a critical step at the Protected Site. Before doing the inventory mapping, consolidate all the VMs into a single folder, named something like SRM, at the Protected Site. Create a similar folder at the Recovery Site so that we have a one-to-one mapping. Also, if we are mapping "Compute Resources" to a cluster, that cluster must have HA/DRS enabled; otherwise SRM will NOT allow the bubble VMs to be created.

This assumes that we are using a stretched VLAN and that those VLANs have been created at the Recovery Site.

This step is not required at the Recovery Site.
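The two checks in step 6 (every protected item has a one-to-one mapping, and a cluster used as a compute target has HA/DRS enabled) can be sketched as a pre-flight review. This is a hypothetical Python model of your own inventory notes, not SRM's mapping interface; the `Cluster` type and the prefixed item names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Cluster:
    name: str
    ha_enabled: bool
    drs_enabled: bool


def mapping_problems(
    protected_items: List[str],
    mapping: Dict[str, str],
    recovery_clusters: Dict[str, Cluster],
) -> List[str]:
    """List protected items that are unmapped or mapped to a cluster without HA/DRS."""
    problems = []
    for item in protected_items:
        target = mapping.get(item)
        if target is None:
            problems.append(f"{item}: not mapped")
            continue
        cluster = recovery_clusters.get(target)
        # Only compute-resource targets are clusters; folders and networks are skipped here.
        if cluster is not None and not (cluster.ha_enabled and cluster.drs_enabled):
            problems.append(f"{item} -> {target}: HA/DRS not enabled")
    return problems


# Hypothetical inventory names.
protected = ["folder:SRM", "cluster:Prod", "network:VLAN-100"]
mapping = {"folder:SRM": "folder:SRM", "cluster:Prod": "cluster:DR"}
clusters = {"cluster:DR": Cluster("cluster:DR", ha_enabled=True, drs_enabled=False)}
for problem in mapping_problems(protected, mapping, clusters):
    print(problem)
```

Here the sketch would report that the DR cluster lacks DRS and that the VLAN has no mapping yet.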

7. We now need to create protection groups at the Protected Site; this is not required at the Recovery Site.

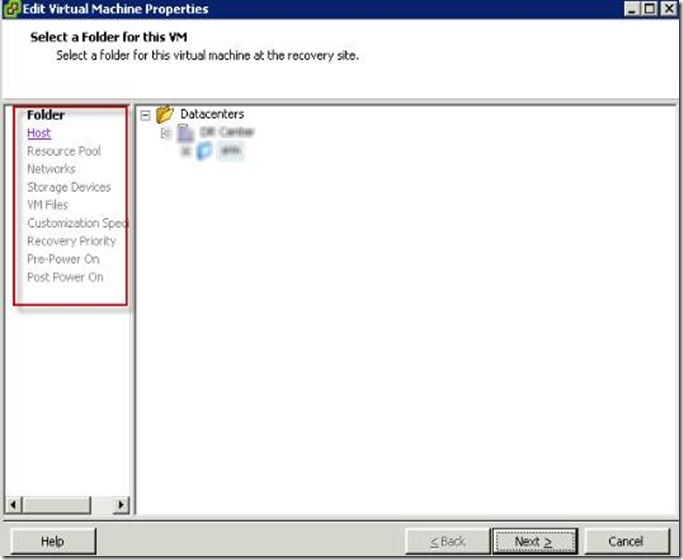

A protection group is based on individual LUNs and on which VMs on those LUNs need to be protected. A LUN can belong to only one protection group and cannot be mapped to any other. Hence it is very important to classify the LUNs into HIGH/NORMAL/LOW categories and plan the mapping accordingly. If the inventory mapping has been done correctly, the status will show as "Configured for protection".
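When planning the HIGH/NORMAL/LOW classification on paper, it helps to enforce the "one LUN, one protection group" rule up front. The class below is a hypothetical planning helper (not part of SRM); the LUN and group names are made up.

```python
from typing import Dict, List


class ProtectionGroupPlan:
    """Hypothetical planning helper: a LUN may belong to one protection group only."""

    def __init__(self) -> None:
        self.owner: Dict[str, str] = {}  # LUN -> protection group

    def assign(self, lun: str, group: str) -> None:
        current = self.owner.get(lun)
        if current is not None and current != group:
            raise ValueError(f"LUN {lun} already belongs to {current}; cannot add it to {group}")
        self.owner[lun] = group

    def groups(self) -> Dict[str, List[str]]:
        """Return group -> sorted list of LUNs."""
        result: Dict[str, List[str]] = {}
        for lun, group in sorted(self.owner.items()):
            result.setdefault(group, []).append(lun)
        return result


plan = ProtectionGroupPlan()
plan.assign("lun01", "PG-HIGH")
plan.assign("lun02", "PG-HIGH")
plan.assign("lun07", "PG-LOW")
print(plan.groups())  # {'PG-HIGH': ['lun01', 'lun02'], 'PG-LOW': ['lun07']}
try:
    plan.assign("lun01", "PG-LOW")
except ValueError as err:
    print(err)
```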

We can also run through the wizard to configure each VM individually. Here we can set the startup priority (low/normal/high), which determines which VMs are started first.
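The effect of the startup priority can be modeled as a stable sort: VMs are grouped high, then normal, then low, and within the same priority they keep the order they have in the list. This is an assumed simplification of SRM's behaviour, written in Python with hypothetical VM names.

```python
from typing import List, Tuple

PRIORITY_RANK = {"high": 0, "normal": 1, "low": 2}


def startup_order(vms: List[Tuple[str, str]]) -> List[str]:
    """Order VMs high -> normal -> low.

    sorted() is stable, so VMs with the same priority keep the order in which
    they appear in the input list.
    """
    return [name for name, _ in sorted(vms, key=lambda vm: PRIORITY_RANK[vm[1]])]


# Hypothetical VM names and priorities.
vms = [("app01", "normal"), ("db01", "high"), ("web01", "low"), ("db02", "high")]
print(startup_order(vms))  # ['db01', 'db02', 'app01', 'web01']
```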

If replication has completed, you can also see the status under storage devices.

Also note that the VMs at the Protected Site must not have any CD-ROM or floppy devices connected; otherwise the automatic configuration will fail.
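Before configuring protection, it is worth listing the VMs that still have a CD-ROM or floppy connected. The sketch below works on a hypothetical inventory you would fill in yourself (for example from a VirtualCenter export); the `Device` type and VM names are assumptions, not an SRM or VirtualCenter API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Device:
    kind: str        # e.g. "cdrom", "floppy", "disk", "nic"
    connected: bool


REMOVABLE = {"cdrom", "floppy"}


def vms_with_connected_media(vms: Dict[str, List[Device]]) -> List[str]:
    """Return the names of VMs that still have a CD-ROM or floppy device connected."""
    return sorted(
        name
        for name, devices in vms.items()
        if any(d.kind in REMOVABLE and d.connected for d in devices)
    )


# Hypothetical inventory.
inventory = {
    "db01": [Device("disk", True), Device("cdrom", False)],
    "app01": [Device("disk", True), Device("cdrom", True)],
    "web01": [Device("disk", True), Device("floppy", True)],
}
print(vms_with_connected_media(inventory))  # ['app01', 'web01']
```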

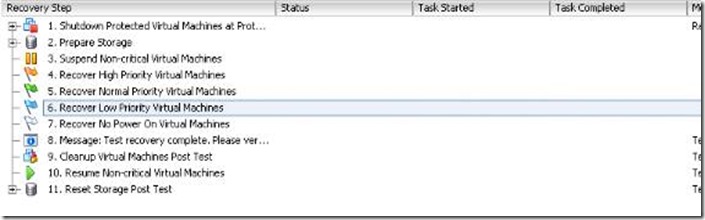

8. Once all the steps above are configured, we can set up the recovery plan at the Recovery Site. A recovery plan can be run in two modes: test mode and recovery mode. Test mode ensures that the actual recovery will run as required. It runs using FlexClone LUNs (in the case of NetApp storage) and an isolated recovery bubble network, so we need a FlexClone license on the recovery-site filer. These tests do not impact the production system. After the test, all the VMs are powered off and the LUNs are resynced. In the recovery plan we can also move VMs within the priority list to make sure a given VM starts first, then the next, and so on.

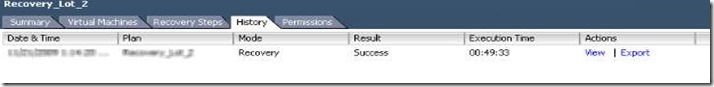
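The difference between the two modes described in steps 8 and 9 can be summarized as a checklist. This Python sketch only encodes the sequence as described in this post (it does not drive SRM), and the step wording and `flexclone_licensed` flag are illustrative assumptions.

```python
from typing import List


def plan_steps(mode: str, flexclone_licensed: bool = True) -> List[str]:
    """Return the high-level sequence for a recovery plan run in the given mode."""
    power_on = "power on VMs by priority (high -> normal -> low)"
    if mode == "test":
        # Test mode uses FlexClone copies, so the recovery-site filer needs the license.
        if not flexclone_licensed:
            raise RuntimeError("test mode needs a FlexClone license on the recovery-site filer")
        return [
            "create FlexClone LUNs from the replicated LUNs",
            "connect VMs to the isolated bubble network",
            power_on,
            "power off VMs and resync LUNs",
        ]
    if mode == "recovery":
        return [
            "attach the replicated LUNs",
            power_on,
            "export the report from History",
        ]
    raise ValueError(f"unknown mode: {mode!r}")


for step in plan_steps("test"):
    print(step)
```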

9. In actual recovery mode, SRM attaches the replicated LUNs and then powers on the VMs in order of priority, from high to low. Once this is over, you can export the report by clicking History.